Doing everything right: A .NET fairytale - Part 1

Developers are expected to strive for best practice in every aspect of their work. Best practices exist for a reason, and when it comes to performance or security, they are no doubt the path to take. But we're usually pressed for time with deadlines to hit, and in the end, the code takes the hit.

In my most recent personal project, I decided to take a different approach than I have previously. The projects I work on in my own time usually exist to make something more convenient for myself, and I'm in a hurry to finish them. This time, I want to take my time and attempt to do everything right.

I also wanted to explore new technologies, meaning I had to compromise on certain aspects of the application.

The application

I'm a bit of a trading enthusiast, especially in the cryptocurrency space. I won't go into details about how trading or crypto works as that is not the point of this post. Different crypto exchanges provide different sets of features and functionalities, but I've always been looking for more. And so the journey begins!

A bit of context

Trading is all about making more money than you lose. The purpose of the functionality I want to implement is to prevent users from losing large amounts of money. A couple of features:

- Configurable 'max' amount for a single position

- Requirement for 'stop-loss' for all trades

- Market analyzers to recommend action
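The first two features boil down to a check that runs before an order is sent to the exchange. Here is a minimal sketch of that idea. All names (RiskRules, OrderRequest, RiskGuard) are hypothetical and invented for illustration; they are not taken from the actual application.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical usage: an order that is too large and has no stop-loss.
var rules = new RiskRules(MaxPositionAmount: 1_000m, RequireStopLoss: true);
var order = new OrderRequest("BTC-USD", Amount: 1_500m, StopLossPrice: null);

foreach (var violation in RiskGuard.Validate(order, rules))
{
    Console.WriteLine(violation);
}

// Hypothetical types, made up for this sketch.
public record RiskRules(decimal MaxPositionAmount, bool RequireStopLoss);

public record OrderRequest(string Symbol, decimal Amount, decimal? StopLossPrice);

public static class RiskGuard
{
    // Returns every rule the order breaks; an empty list means the order may go through.
    public static IReadOnlyList<string> Validate(OrderRequest order, RiskRules rules)
    {
        var violations = new List<string>();

        if (order.Amount > rules.MaxPositionAmount)
        {
            violations.Add($"Amount {order.Amount} exceeds the configured max of {rules.MaxPositionAmount}.");
        }

        if (rules.RequireStopLoss && order.StopLossPrice is null)
        {
            violations.Add("A stop-loss is required for every trade.");
        }

        return violations;
    }
}
```

Returning all violations instead of failing on the first one lets a client show the user everything that needs fixing in one go.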

How?

I have a few options on how to solve this.

- Create a client that exposes only the wanted functionality and use it as a supplement to the existing clients.

- Create an entirely new client implementing all existing and new functionality.

Naturally, the second option was more appealing to me: I get to write more code, explore more technologies, and get more time off from my wife (hopefully she doesn't read this).

The architecture

This application would thrive in a microservice architecture, as many individual services would benefit from being scaled individually. However, microservices slow down development, so I want to build it as a monolith for as long as I can, with the ability to split it up easily whenever needed.

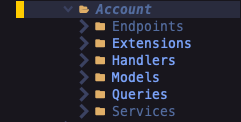

The architecture reflects this: every 'service' is organized as a feature. Each feature implements handlers for the queries and commands that it exposes to the mediator. I've previously written a more detailed post about MediatR and minimal APIs.
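To make the mediator idea concrete, here is a stripped-down sketch of in-process dispatch: a request type is mapped to exactly one handler, and callers only know the request and response types. TinyMediator and GetGreetingQuery are hypothetical names for this illustration. This is not how MediatR is implemented and not its API, just the underlying idea.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical usage: a "feature" registers a handler, a caller sends a query.
var mediator = new TinyMediator();
mediator.Register<GetGreetingQuery, string>(query => Task.FromResult($"Hello, {query.Name}!"));

Console.WriteLine(await mediator.Send<GetGreetingQuery, string>(new GetGreetingQuery("Ada")));

// Hypothetical request type, made up for this sketch.
public record GetGreetingQuery(string Name);

public class TinyMediator
{
    // One handler per request type, stored behind an untyped delegate.
    private readonly Dictionary<Type, Func<object, Task<object>>> _handlers = new();

    public void Register<TRequest, TResponse>(Func<TRequest, Task<TResponse>> handler)
    {
        _handlers[typeof(TRequest)] = async request => await handler((TRequest)request);
    }

    public async Task<TResponse> Send<TRequest, TResponse>(TRequest request)
    {
        if (!_handlers.TryGetValue(typeof(TRequest), out var handler))
        {
            throw new InvalidOperationException($"No handler registered for {typeof(TRequest).Name}.");
        }

        return (TResponse)await handler(request);
    }
}
```

The caller never references the handler, which is what keeps features decoupled from each other.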

All features follow the same folder structure:

In addition, there is a Shared folder containing code that is shared between features. This will eventually become a class library and possibly be published as a NuGet package.

Deep dive - Data access

I'll take a deep dive into a single feature - the user feature.

First of all, the database. I use an abstract BaseDbContext class that all other contexts inherit from.

    using Microsoft.EntityFrameworkCore;

    public abstract class BaseDbContext : DbContext
    {
        // Each feature's context supplies its own schema name.
        protected abstract string DefaultSchema { get; }

        protected override void OnModelCreating(ModelBuilder modelBuilder)
        {
            modelBuilder.HasDefaultSchema(DefaultSchema);
        }
    }

The purpose of this is to override the default schema for each context.

    public class UserContext : BaseDbContext
    {
        protected override string DefaultSchema => "User";
    }

All tables in the UserContext will use the schema User, e.g. User.Profiles. If a feature other than the user feature needs its own representation of a user, it would not use the user feature's model, but instead its own.

Imagine there is a chat feature that needs a way to represent users but relies on more information than the user feature supplies. It would create its own User model with a Chat.User table in the database. Giving each feature its own schema is a simple way to segregate the data and keep track of what belongs where. It also makes it easy to create an individual database for each feature in the future, further simplifying the move to microservices.

Furthermore, I use an abstract BaseContextService class that implements basic CRUD and other operations to combat boilerplate for each and every context.

    public abstract class BaseContextService<TContext, TEntity>
        where TContext : DbContext
        where TEntity : BaseEntity
    {
        public abstract TContext Context { get; set; }
    }

All entities that are stored in the database inherit from the BaseEntity class:

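    using System;
    using System.ComponentModel.DataAnnotations;
    using System.ComponentModel.DataAnnotations.Schema;

    public class BaseEntity
    {
        // Primary key, generated by the database on insert.
        [DatabaseGenerated(DatabaseGeneratedOption.Identity)]
        [Key]
        public Guid Id { get; set; }

        // Soft delete flag: rows are marked as deleted instead of being removed.
        public bool Deleted { get; set; }
    }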

All entities (so far at least) have a generated ID and a soft delete flag. One could discuss the merits of using a Guid versus an int as ID. I ended up with Guid because I want my code to be consistent and I do not want to expose myself to enumeration of e.g. users.

The BaseContextService implements common functionality such as FirstOrDefaultAsync:

    public async Task<TEntity?> FirstOrDefaultAsync(Expression<Func<TEntity, bool>> query)
    {
        try
        {
            // Filter out soft-deleted rows before picking the first match, so a
            // deleted row cannot hide a live row that also satisfies the query.
            return await Context.Set<TEntity>()
                .Where(entity => !entity.Deleted)
                .FirstOrDefaultAsync(query);
        }
        catch (Exception e)
        {
            Console.WriteLine(e);
            throw;
        }
    }

    // ... more methods

NOTE: Ignore the error handling. I'm still trying to figure out a good, uniform way to handle errors across the entire application and did not want to rewrite it multiple times before landing on something.
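Soft deletes have one subtlety worth spelling out: checking the Deleted flag on the first match is not the same as filtering deleted rows out before picking the first match. The sketch below shows the difference with plain in-memory LINQ instead of a real DbContext. Profile and SoftDelete are hypothetical names for this illustration, and an in-memory list has a fixed order, which a database table without an ORDER BY does not guarantee.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var profiles = new List<Profile>
{
    new Profile("alice@example.com", Deleted: true),   // old, soft-deleted row
    new Profile("alice@example.com", Deleted: false),  // re-registered, live row
};

// Checking after the fetch misses the live row; filtering first finds it.
Console.WriteLine(SoftDelete.CheckAfter(profiles, p => p.Email == "alice@example.com") is null);   // True
Console.WriteLine(SoftDelete.FilterBefore(profiles, p => p.Email == "alice@example.com") is null); // False

// Hypothetical types, made up for this sketch.
public record Profile(string Email, bool Deleted);

public static class SoftDelete
{
    // Takes the first match, then discards it if it happens to be deleted.
    public static Profile? CheckAfter(IEnumerable<Profile> rows, Func<Profile, bool> query)
    {
        var found = rows.FirstOrDefault(query);
        return found is { Deleted: true } ? null : found;
    }

    // Removes deleted rows first, then takes the first remaining match.
    public static Profile? FilterBefore(IEnumerable<Profile> rows, Func<Profile, bool> query)
    {
        return rows.Where(row => !row.Deleted).FirstOrDefault(query);
    }
}
```

If more than one row can ever match a query, filtering first is the safer default.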

Now that the BaseContextService implements the functions we want, all we need to do is create a service for each of our models and override the context.

    public class UserProfileContextService : BaseContextService<UserContext, UserProfile>
    {
        public UserProfileContextService(IConfiguration config)
        {
            Context = new UserContext(config);
        }

        public sealed override UserContext Context { get; set; }
    }

No need to implement CRUD etc for each and every table in the database.

Deep dive - Exposure

Another question I asked myself was how to properly expose data from features. I want everything inside the feature to be completely isolated from the outside, exactly like a microservice. As all features are in the same assembly, I can't actually isolate the logic, but the idea is still the same!

All features expose the relevant functions through MediatR, so there are no direct dependencies on the services, models, or other logic contained in a feature. This leaves the question: how do you expose data without exposing the underlying data model? The answer: DTOs. A DTO is a contract between the exposing and the consuming service. If the underlying data model in the service changes to handle some business logic that the consuming service does not care about, the consuming service does not need to change, because the DTO stays the same.
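As a rough sketch of that contract, the mapping below copies only the fields a consumer should see from the internal model onto the DTO. The type names UserProfile and UserProfileDto come from this post, but every property on them, as well as the UserProfileMappings helper, is an assumption made up for the example.

```csharp
using System;

// Hypothetical usage: map an internal entity to its public DTO.
var entity = new UserProfile
{
    Id = Guid.NewGuid(),
    Email = "alice@example.com",
    DisplayName = "Alice",
    PasswordHash = "not-a-real-hash",
};

UserProfileDto dto = UserProfileMappings.ToDto(entity);
Console.WriteLine(dto);

// Internal model: free to change as long as the mapping keeps producing the same DTO.
// All properties here are assumptions for illustration.
public class UserProfile
{
    public Guid Id { get; set; }
    public bool Deleted { get; set; }
    public string Email { get; set; } = "";
    public string DisplayName { get; set; } = "";
    public string PasswordHash { get; set; } = "";
}

// Public contract: no soft delete flag, no password hash.
public record UserProfileDto(Guid Id, string Email, string DisplayName);

public static class UserProfileMappings
{
    public static UserProfileDto ToDto(UserProfile profile) =>
        new(profile.Id, profile.Email, profile.DisplayName);
}
```

Adding or renaming internal fields then only touches the model and the mapping, never the consumers.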

MediatR runs in-process, and moving to microservices would remove the need for it. Should we move to microservices, the replacement could be out-of-process events or simply an HttpClient and an API.

Example - Creating a user

We expose the ability to create users through MediatR:

    public record CreateUserCommand(UserRegistrationDto UserRegistration) : IRequest<UserProfileDto?>;

The CreateUserCommand record represents the Write operation that can be done. It accepts a UserRegistrationDto and returns a UserProfileDto.

The handler is as follows

    public class CreateUserHandler : IRequestHandler<CreateUserCommand, UserProfileDto?>
    {
        private readonly IUserProfileService _userProfileService;

        public CreateUserHandler(IUserProfileService userProfileService)
        {
            _userProfileService = userProfileService;
        }

        // The return type must match the response type declared on the command (UserProfileDto?).
        public async Task<UserProfileDto?> Handle(CreateUserCommand request, CancellationToken cancellationToken)
        {
            return await _userProfileService.RegisterUser(request.UserRegistration);
        }
    }

And all we need in the endpoint:

    private static async Task<IResult> Create(IMediator mediator, [FromBody] UserRegistrationDto userRegistration)
    {
        var user = await mediator.Send(new CreateUserCommand(userRegistration));
        return Results.Ok(user);
    }

It's clean, readable, and scalable. The CreateUserCommand and the two DTOs (UserRegistrationDto and UserProfileDto) are the only objects exposed outside of the feature, and the internal logic of the feature is not functionally dependent on them.

What is next?

This was a 'quick' introduction to the architecture, data access, and communication between features in this application. There is still much to discuss, and I'll come back to it. I'm planning to keep posting about this as a series on how you might plan out a project in advance and how it changes over time. The idea is to highlight the choices and adjustments we make along the way as developers. Starting out with the intention to do everything right might eventually prove to be very hard. Let's see!

I intend to touch on subjects such as

- Authentication

- Testing

- Error handling

- Logging

And much more!

Anything you would like to discuss, something you disagree with, or anything else? Contact me!

Disclaimer: These are my own ideas and opinions. I do not claim that this is best practice or how you should do it. I simply aim to learn as much as possible in my journey through this landscape.